Model-driven Indoor Scenes Modeling from a Single Image

Zicheng Liu, Yan Zhang, Wentao Wu, Kai Liu, Zhengxing Sun

Accepted to Graphics Interface Conference (Proc. GI 2015)

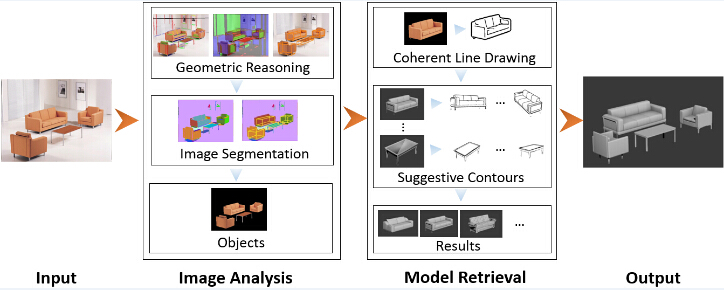

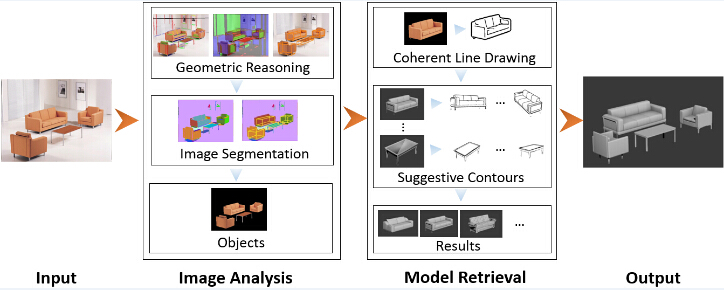

In this paper, we present a new approach to modeling 3D indoor scenes from a single image. Given a single input image of an indoor scene (containing, for example, a sofa or a tea table), a 3D scene is reconstructed from an existing model library in two stages: image analysis and model retrieval. In the image analysis stage, we obtain object information from the input image by combining geometric reasoning with image segmentation. In the model retrieval stage, line drawings are extracted from the 2D objects and the 3D models using different line rendering methods. We use various tokens to represent local features and organize them into a star-graph that provides a global description. Finally, by comparing the similarity of the encoded line drawings, models are retrieved from the model library and the scene is reconstructed. Experimental results show that, driven by the given model library, indoor scene modeling from a single image can be achieved automatically and efficiently.

PAPER: Model-driven Indoor Scenes Modeling from a Single Image

supplementary material

Overview

An overview of our approach. With an input indoor scene image, the output 3D scene is reconstructed with a two-stage process: image analysis and model retrieval.
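The retrieval stage (tokens as local features, grouped into a star-graph and compared by similarity) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the `Token` layout, the cosine and Gaussian scoring, the `sigma` value, and the function names `token_similarity`, `star_similarity`, and `retrieve` are all hypothetical. The actual descriptors and matching scheme used in the paper may differ.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """One leaf of a star-graph (hypothetical layout, not from the paper)."""
    descriptor: tuple  # local feature vector of a line-drawing patch
    offset: tuple      # (dx, dy) relative to the star center, normalized


def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def token_similarity(p, q, sigma=0.25):
    """Appearance score times a Gaussian penalty on offset disagreement.

    sigma is an assumed tolerance on normalized offsets.
    """
    appearance = max(0.0, cosine(p.descriptor, q.descriptor))
    dist2 = (p.offset[0] - q.offset[0]) ** 2 + (p.offset[1] - q.offset[1]) ** 2
    return appearance * math.exp(-dist2 / (2 * sigma ** 2))


def star_similarity(query, candidate):
    """Symmetric average of each token's best match in the other star."""
    if not query or not candidate:
        return 0.0

    def one_way(a, b):
        return sum(max(token_similarity(p, q) for q in b) for p in a) / len(a)

    return 0.5 * (one_way(query, candidate) + one_way(candidate, query))


def retrieve(query, library, top_k=3):
    """Return the names of the top_k library models, most similar first."""
    scored = sorted(
        ((star_similarity(query, tokens), name) for name, tokens in library.items()),
        reverse=True,
    )
    return [name for _, name in scored[:top_k]]
```

In this sketch, `library` is a plain dictionary mapping a model name to the token list of its rendered line drawing, and `query` is the token list of a segmented image object. A model with several rendered views could be handled by storing one entry per view.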

Evaluation

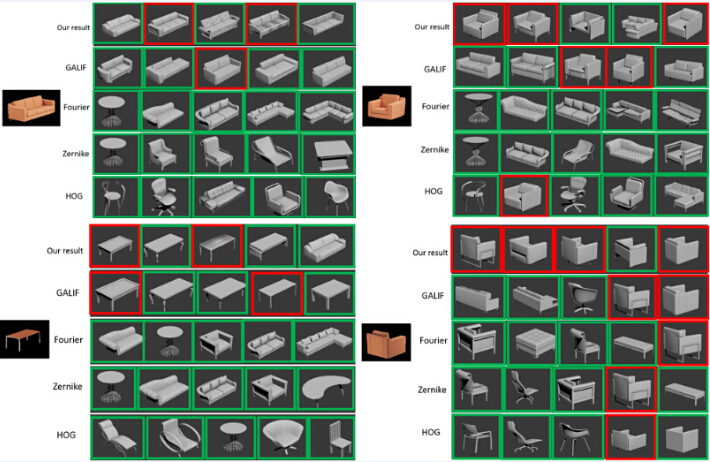

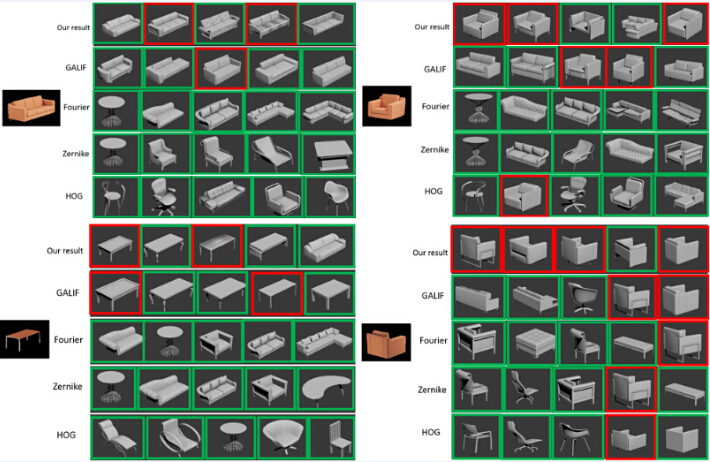

Retrieval results for one scene using different feature descriptors. A red frame indicates that the model belongs to the ground truth, while a green frame indicates that it does not. (Please refer to the supplementary material for a clearer image.)

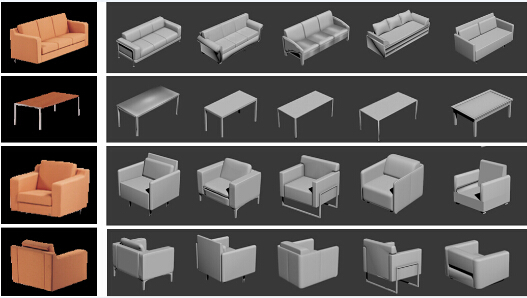

Ground truth obtained with the iterative method.

Other Scenes

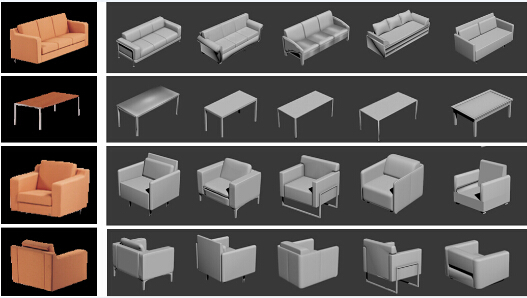

Our modeling results for other scenes.
